Since my fieldwork I’ve had to do lots of data entry. I finished entering the bulk of the data from my notebooks into a spreadsheet today, which comes to a total of about 290564 cells of data, although that number is a bit exaggerated as some of the columns have a lot of blank cells. In any case it’s a lot of data. I’m trying hard to make as much of my data entry and cleaning process repeatable and reproducible, in the hope that if I find any mistakes they will be easier to fix if I have an explicit record of how I entered the data.

I used Microsoft Excel to input the data and there were a few things that got in the way when Excel was trying to be helpful. For example the GPS codes generated by my Garmin GPS unit are sometimes formatted with leading zeroes, e.g. “028”, “0592”, “10391”. Excel automatically formats these codes like so: “28”, “592”, “10391”. This means that when I want to match the GPS coordinates from a CSV file based on their IDs, it doesn’t work. Additionally, I found that when I exported the spreadsheet as a CSV file and then imported it into R for analysis, some of the numerical values where slightly different, for example, “4.6” might be changed to “4.5699999997”. This is easy to fix in R with round(x$col, digits = 1), but it really makes me worry that some other data might be lost or altered in subtle ways that I don’t notice until it’s too late.

So, I’ve been trying to find a better way to input data. Here are a few options I’ve come up with:

It is possible to pre-format columns in Excel so it just accepts the input you give it. Open Excel, highlight the relevant columns and right click, click “Format Cells…”, then change the category to “Text”. Additionally, I could choose to only save as CSV files when exporting from Excel.

Another option is to use a more simple CSV editor rather than Excel, something that doesn’t try to be helpful. Table Tool seems like an alright option, but I’m not a huge fan of the way this program manages data entry, there’s a lot of mouse clicking needed to activate a cell for editing and you have to manually click to add or delete rows.

A final option which really appeals to me is using Vim to write CSV files with the help of some plugins. Obviously Vim can edit CSV files without anything extra, as they’re just plain text, but this gets cumbersome when you have rows of different width, blank cells etc., as the columns become offset. This makes moving between the rows in the same column difficult. Luckily there is a plugin called csv.vim

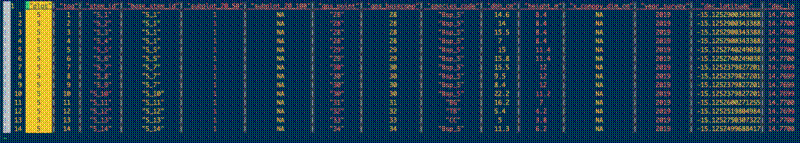

which has some really nice features which make editing CSV files in Vim quite easy. Firstly, the plugin detects the column delimiter and replaces it with a |, which makes distinguishing column boundaries a lot easier. Also, columns are highlighted in different colours, which also improves the ability to scan the CSV down a column. You can also set Vim to highlight the current column with let g:csv_highlight_column = 'y'. Movement across rows and columns can be achieved with “H,J,K,L” which will be familiar to Vim users. The most important feature for me is the ability to visually align the columns with :ArrangeColumn. Below is an example of how this works:

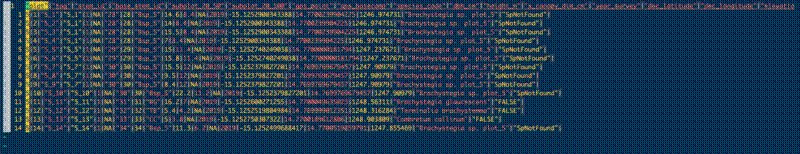

Un-arranged columns

Arranged columns with ArrangeColumn

There are a tonne of other features in csv.vim but most of the features related to analysis I’d much rather do in R later on. Deleting, hiding and sorting columns could come in really useful however, so I’ll spend some time trying to figure out the commands for that. I’ve actually had a different CSV plugin for a while already, called rainbow_csv

, which helpfully colours the text in different CSV columns to help to distinguish one column for another. I’ve since removed this plugin however, as csv.vim does the same thing but adds lots of other features.